✨ Introduction

AI is transforming the way we build and deploy applications, and cloud technology makes it easier than ever to scale. At AiBlogQuest.com, we simplify complex AI concepts so developers and creators can take action. In this beginner-friendly guide, you’ll learn the step-by-step process of deploying AI models in the cloud, the tools you need, and best practices to ensure smooth scaling.

🛠️ What Does Deploying AI Models in the Cloud Mean?

Deploying AI models in the cloud means taking your trained machine learning or deep learning model and making it available for real-world use via cloud platforms like AWS, Google Cloud, or Azure. Instead of running locally, your model is hosted on powerful cloud servers that handle requests, scale automatically, and ensure global accessibility.

📌 Benefits of Cloud Deployment for AI Models

- 🌍 Global Access: Users anywhere can interact with your model.
- ⚡ Scalability: Capacity can scale automatically with demand.
- 💰 Cost-Efficient: Pay-as-you-go pricing means you pay only for the resources you use.
- 🔒 Security: Built-in tools for data encryption and compliance.
- 🔄 Continuous Updates: Roll out new model versions with little or no downtime.

🔑 Steps to Deploy AI Models in the Cloud

1. ✅ Train and Save Your Model

First, train your AI model locally using a framework like TensorFlow, PyTorch, or scikit-learn. Then save the trained model in a format your serving code can load later (for example, .h5 for Keras/TensorFlow, .pt for PyTorch, or .pkl for scikit-learn).

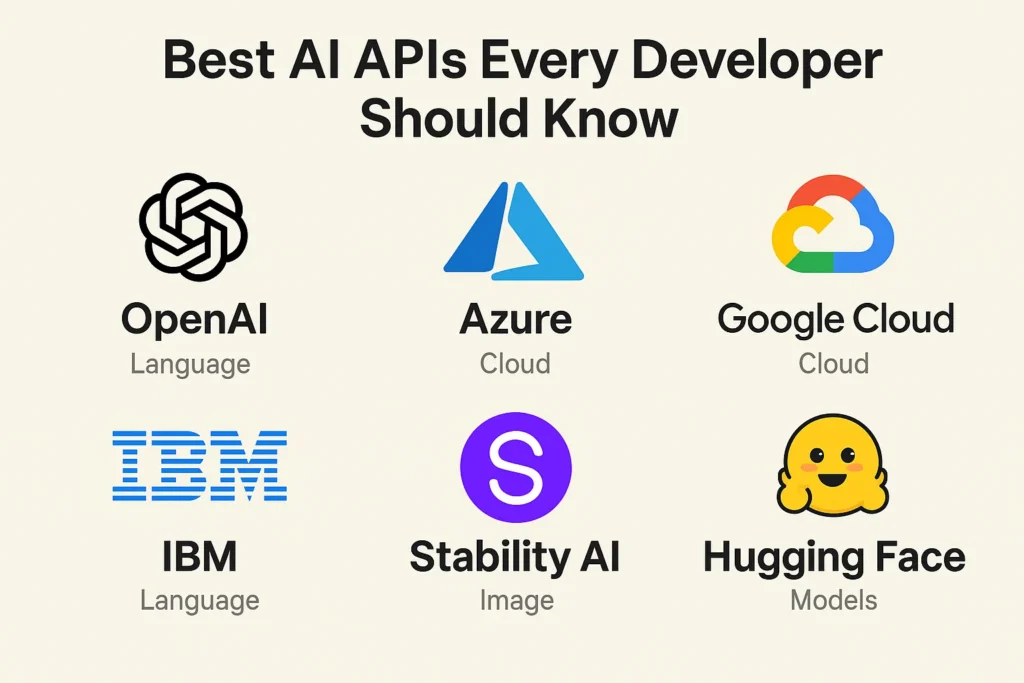
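As a minimal sketch of the save step, the example below "trains" a toy model, pickles it to a `.pkl` file, and loads it back. The `train` and `predict` functions and the file name `model.pkl` are hypothetical stand-ins so the example runs with only the standard library. A real scikit-learn estimator can be pickled in the same way, while TensorFlow and PyTorch provide their own save functions.

```python
import pickle
import tempfile
from pathlib import Path


def train(xs, ys):
    """Toy 'training': learn a threshold halfway between the two class means."""
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    return {"threshold": (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2}


def predict(model, xs):
    """Return 1 for inputs at or above the learned threshold, else 0."""
    return [1 if x >= model["threshold"] else 0 for x in xs]


def save_model(model, path):
    """Serialize the trained model to disk."""
    with open(path, "wb") as f:
        pickle.dump(model, f)


def load_model(path):
    """Load a saved model. Only unpickle files you trust."""
    with open(path, "rb") as f:
        return pickle.load(f)


model = train([1.0, 2.0, 8.0, 9.0], [0, 0, 1, 1])
model_path = Path(tempfile.mkdtemp()) / "model.pkl"  # hypothetical file name
save_model(model, model_path)
restored = load_model(model_path)
print(predict(restored, [3.0, 7.0]))  # [0, 1]
```

The saved file is what you later copy into your container or upload to cloud storage.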

2. 📂 Choose a Cloud Provider

Select a cloud platform based on budget, familiarity, and ecosystem.

- AWS (SageMaker) → great for enterprise scaling.
- Google Cloud Vertex AI (the successor to AI Platform) → ideal for TensorFlow users.
- Microsoft Azure ML → flexible integrations.

3. 🖥️ Set Up a Virtual Machine

Create a cloud instance (such as an AWS EC2 instance, a Google Compute Engine VM, or an Azure VM) to host your app.

4. ⚙️ Containerize Your Model

Use Docker to package your model with dependencies. This ensures it works consistently across environments.
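To make the packaging step concrete, here is a small Python sketch that prints the text of a basic Dockerfile for a Python model server. The function name `render_dockerfile`, the entry script `serve.py`, the `requirements.txt` file, and port 8000 are all assumptions chosen for illustration; adjust them to match your project.

```python
def render_dockerfile(python_version="3.11", port=8000, entrypoint="serve.py"):
    """Return the text of a small Dockerfile for a Python model server.

    The file names and port are illustrative assumptions, not requirements.
    """
    lines = [
        f"FROM python:{python_version}-slim",
        "WORKDIR /app",
        "# Install dependencies first so Docker can cache this layer.",
        "COPY requirements.txt .",
        "RUN pip install --no-cache-dir -r requirements.txt",
        "# Copy the application code and the saved model file.",
        "COPY . .",
        f"EXPOSE {port}",
        f'CMD ["python", "{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


print(render_dockerfile())
```

Save the printed text as a file named `Dockerfile` next to your code, then build and run the image with `docker build -t my-model .` and `docker run -p 8000:8000 my-model` (the image name `my-model` is also just an example).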

5. 🌐 Deploy via API Endpoint

Use a framework like FastAPI or Flask to expose your model as an HTTP API. Clients send input data in a request and receive the model's prediction in the response.
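The sketch below shows the request and response flow of a prediction endpoint. To keep it runnable with no extra installs, it uses Python's built-in `http.server` module rather than FastAPI or Flask, so treat it as an illustration of the idea and not as production code. The `/predict` path, the `features` field, and the toy `predict` function are assumptions made for this example.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def predict(features):
    """Hypothetical stand-in for a real model: returns the sum of the inputs."""
    return sum(features)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            features = [float(x) for x in payload["features"]]
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "Expected a JSON object with a 'features' list")
            return
        body = json.dumps({"prediction": predict(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # Silence per-request logging in this sketch.


def make_server(host="127.0.0.1", port=8000):
    """Create the server; call .serve_forever() on the result to start it."""
    return ThreadingHTTPServer((host, port), PredictHandler)
```

Calling `make_server().serve_forever()` starts the server locally. A request such as `curl -X POST http://127.0.0.1:8000/predict -d '{"features": [1, 2, 3.5]}'` should then return a JSON body containing the prediction. In a real deployment you would load your saved model once at startup and call it inside `predict`.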

6. 📊 Monitor Performance

Set up monitoring tools (Grafana, Prometheus, or built-in dashboards) to track latency, uptime, and errors.
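Before wiring up a full monitoring stack, it can help to see what is being measured. The following sketch records request counts, errors, and latency in memory with a decorator. The `Metrics` class and its method names are hypothetical and not part of Grafana, Prometheus, or any cloud SDK; a real setup would export these numbers to one of those tools.

```python
import time
from functools import wraps


class Metrics:
    """In-memory counters for a single process (not thread-safe)."""

    def __init__(self):
        self.requests = 0
        self.errors = 0
        self.latencies = []  # seconds, one entry per call

    def track(self, func):
        """Decorator that records latency and failures for each call."""
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            self.requests += 1
            try:
                return func(*args, **kwargs)
            except Exception:
                self.errors += 1
                raise
            finally:
                self.latencies.append(time.perf_counter() - start)
        return wrapper

    def summary(self):
        ordered = sorted(self.latencies)
        if ordered:
            # Nearest-rank 95th percentile using integer arithmetic.
            rank = (95 * len(ordered) + 99) // 100
            p95 = ordered[rank - 1]
        else:
            p95 = 0.0
        return {
            "requests": self.requests,
            "error_rate": self.errors / self.requests if self.requests else 0.0,
            "p95_latency_s": p95,
        }


metrics = Metrics()


@metrics.track
def predict(x):
    return x * 2  # placeholder for a real model call


predict(21)
print(metrics.summary())
```

Latency percentiles and error rate are the numbers you would typically chart and alert on.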

7. 🔄 Scale with Demand

Leverage auto-scaling features in your cloud platform to handle peak loads.
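Managed auto-scalers make this decision for you, but the underlying logic is often a simple proportional rule: add or remove replicas until the load per replica is close to a target. The sketch below illustrates that rule. The function name, parameters, and default limits are assumptions for this example and do not correspond to any specific cloud provider's API.

```python
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: size the fleet so per-replica load nears target.

    current_load and target_load are per-replica averages of the same metric,
    for example requests per second or CPU utilization.
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    wanted = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(2, current_load=90, target_load=60))   # 3
print(desired_replicas(4, current_load=10, target_load=60))   # 1
print(desired_replicas(5, current_load=300, target_load=60))  # 10 (capped)
```

Setting a maximum replica count is also a practical way to keep a traffic spike from producing an unexpectedly large bill.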

🧭 Best Practices for Beginners

- Start small with free tiers before scaling.
- Always use version control for your models.
- Enable logging and monitoring for debugging.
- Keep data security and compliance in mind if handling sensitive data.
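One lightweight way to act on the version control advice above is to record a hash of every model file you deploy, so you can always tell which exact artifact is running. The sketch below is one possible approach under simple assumptions: the `register_version` helper and the JSON registry file are hypothetical, and dedicated model registries offer far more.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path):
    """Hash the file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def register_version(model_path, registry_path, notes=""):
    """Append the model file's hash to a JSON registry and return the entry."""
    registry_path = Path(registry_path)
    if registry_path.exists():
        entries = json.loads(registry_path.read_text())
    else:
        entries = []
    entry = {
        "version": len(entries) + 1,
        "file": Path(model_path).name,
        "sha256": file_sha256(model_path),
        "notes": notes,
    }
    entries.append(entry)
    registry_path.write_text(json.dumps(entries, indent=2))
    return entry
```

For example, `register_version("model.pkl", "registry.json", notes="baseline")` adds an entry with version 1 the first time it is called. Committing the registry file to Git alongside your code keeps model and code history together.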

🔗 Useful Links – AiBlogQuest.com

- Using LangChain to Build Autonomous Agents
- Exploring Vector Databases for AI Apps

❓ FAQ

Q1: Do I need coding skills to deploy AI models in the cloud?

A1: Basic Python and cloud knowledge help, but many platforms offer no-code or low-code options.

Q2: Which cloud provider is best for AI model deployment?

A2: There is no single best choice. AWS SageMaker is widely used, Google Cloud is excellent for TensorFlow, and Azure ML works well for enterprises.

Q3: How much does it cost to deploy AI models in the cloud?

A3: Costs vary depending on usage, but free tiers are available for small projects.

Q4: Can I update my AI model after deployment?

A4: Yes. You can retrain your model and redeploy the new version, and with a gradual rollout users should see little or no downtime.
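Cloud platforms usually handle updates at the infrastructure level, for example by shifting traffic from the old version to the new one. The same idea can be sketched inside a single process: keep the live model in one place and swap it between requests. The `ModelSlot` class below is a hypothetical illustration, not a feature of any cloud service.

```python
import threading


class ModelSlot:
    """Holds the live model and lets a new version replace it between requests."""

    def __init__(self, model, version):
        self._lock = threading.Lock()
        self._model = model
        self._version = version

    def predict(self, x):
        # Grab a reference under the lock, then run the model outside it so
        # slow predictions do not block a swap.
        with self._lock:
            model, version = self._model, self._version
        return {"version": version, "prediction": model(x)}

    def swap(self, new_model, new_version):
        """Switch new requests to the new model; in-flight calls finish on the old one."""
        with self._lock:
            self._model, self._version = new_model, new_version


slot = ModelSlot(lambda x: x + 1, "v1")
print(slot.predict(1))  # {'version': 'v1', 'prediction': 2}
slot.swap(lambda x: x * 10, "v2")
print(slot.predict(1))  # {'version': 'v2', 'prediction': 10}
```

Returning the version with each prediction makes it easy to confirm which model answered a given request.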