🔍 Explainable AI (XAI): What It Means and Why It Matters in 2025

What is Explainable AI (XAI)? Learn how XAI makes AI decisions more transparent, trustworthy, and understandable for real-world applications—by AiBlogQuest.com.

🤖 Introduction: Why We Need Explainable AI

AI models can do amazing things—from diagnosing diseases to approving loans. But there’s a problem: we often don’t understand how they make decisions.

That’s where Explainable AI (XAI) comes in.

At AiBlogQuest.com, we break down what XAI really means, why it’s crucial, and how it brings transparency, trust, and accountability to the world of artificial intelligence.

💡 What Is Explainable AI (XAI)?

Explainable AI refers to systems that make AI decisions understandable to humans.

It focuses on answering questions like:

- Why did the AI make that decision?
- Can we trust this outcome?
- What influenced the result?

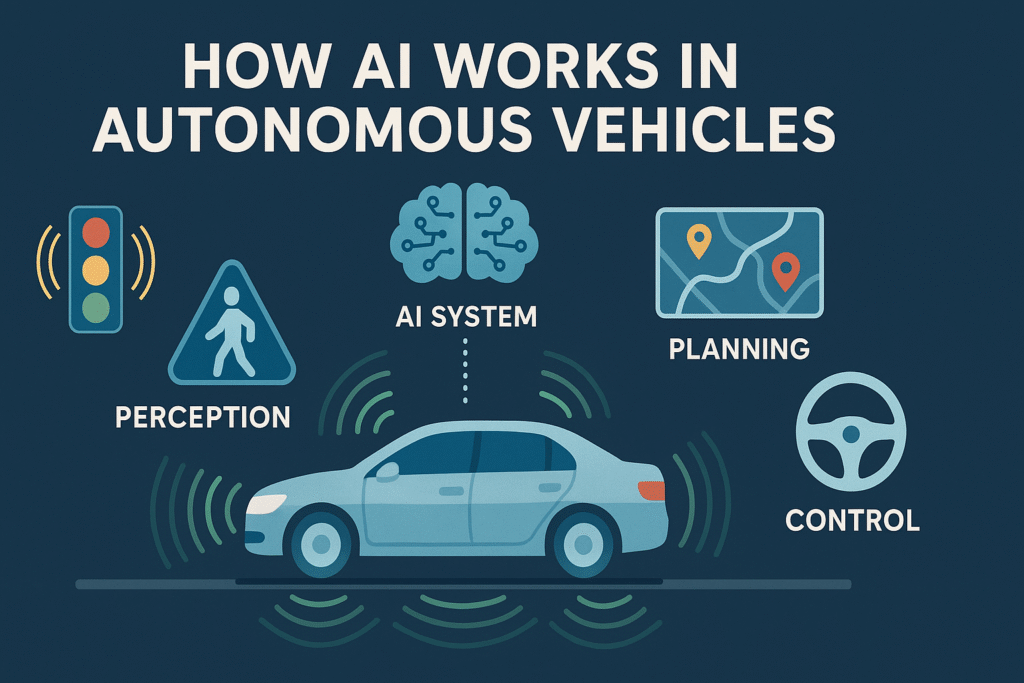

XAI is especially important in high-stakes fields like:

- Healthcare
- Finance
- Law enforcement
- Autonomous vehicles

⚙️ How Explainable AI Works

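Explainable AI either uses models that are interpretable by design (such as decision trees or linear models) or adds an explanation layer, such as feature attributions or visualizations, on top of complex models like neural networks.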

Here are two common types of XAI techniques:

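| Type | Description |
|---|---|
| Model-specific | Techniques that rely on a particular model's internals (e.g., coefficients in logistic regression, split-based feature importance in decision trees) |
| Model-agnostic | Techniques that treat the model as a black box and only query its inputs and outputs (e.g., LIME, SHAP) |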
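To make the distinction concrete, here is a minimal Python sketch using only the standard library. It assumes a made-up logistic-regression loan model; the weights, bias and feature names are illustrative, not taken from any real system. The model-specific explanation reads the weights directly, while the model-agnostic one only calls `predict_proba` and measures how the output moves when each feature is reset to a baseline (a simple occlusion test, standing in for what LIME and SHAP do more rigorously).

```python
import math

# Hypothetical logistic-regression loan model: weights, bias and feature
# names are made up for illustration only.
WEIGHTS = {"income": 0.8, "debt_ratio": -1.5, "late_payments": -0.9}
BIAS = 0.2


def predict_proba(x):
    """Probability of approval for a dict of (already standardized) features."""
    z = BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def model_specific_explanation(x):
    """Reads the model's internals: each feature's contribution to the logit."""
    return {f: WEIGHTS[f] * x[f] for f in WEIGHTS}


def model_agnostic_explanation(predict, x, baseline):
    """Treats `predict` as a black box: resets one feature at a time to its
    baseline value and records how much the predicted probability changes."""
    full = predict(x)
    effects = {}
    for f in x:
        perturbed = dict(x)
        perturbed[f] = baseline[f]
        effects[f] = full - predict(perturbed)
    return effects


applicant = {"income": 1.0, "debt_ratio": 0.5, "late_payments": 2.0}
baseline = {"income": 0.0, "debt_ratio": 0.0, "late_payments": 0.0}

print(model_specific_explanation(applicant))
print(model_agnostic_explanation(predict_proba, applicant, baseline))
```

Both views agree that `late_payments` pulls this applicant's score down the most, but only the model-agnostic version would keep working if the model were swapped for a neural network or a gradient-boosted ensemble.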

🧰 Popular XAI Tools and Frameworks

- LIME (Local Interpretable Model-agnostic Explanations)
- SHAP (SHapley Additive exPlanations)
- Google’s What-If Tool
- IBM AI Explainability 360

These tools help users understand feature importance, decision boundaries, and alternative outcomes.
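SHAP is built on Shapley values from cooperative game theory. The sketch below computes them exactly by enumerating every coalition of features, which is only feasible for a handful of features; the SHAP library relies on faster approximations and model-specific algorithms instead. Assumptions: features "left out" of a coalition take values from a single baseline point (SHAP normally averages over a background dataset), and `model` is a hypothetical function with an interaction term.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    The value of a coalition S is the model output when features in S take
    the instance's values and all other features take the baseline values.
    Cost grows as 2**n, so this is only practical for a few features.
    """
    features = list(x)
    n = len(features)

    def value(coalition):
        point = {f: (x[f] if f in coalition else baseline[f]) for f in features}
        return predict(point)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


# Hypothetical black-box model with an interaction between a and c.
def model(p):
    return 2.0 * p["a"] + 1.0 * p["b"] + 3.0 * p["a"] * p["c"]


x = {"a": 1.0, "b": 2.0, "c": 1.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}
phi = shapley_values(model, x, baseline)
print(phi)
# Efficiency property: attributions sum to f(x) - f(baseline).
print(sum(phi.values()), model(x) - model(baseline))
```

Here `b` receives 2.0 (its purely additive effect), while `a` and `c` split the 3.0 from their interaction equally, giving roughly 3.5 and 1.5. The attributions add up to the gap between the prediction and the baseline prediction, which is the property that makes Shapley-based explanations easy to read.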

🧠 Why Explainable AI Matters

1. 🚨 Trust & Accountability

If an AI denies a loan or flags someone as high-risk, the user has the right to know why. XAI builds user trust by offering clear explanations.
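One way to give that explanation is a counterfactual: tell the applicant what would have had to be different for an approval. Below is a hedged sketch that searches one feature at a time in fixed steps. The scoring weights, threshold, step sizes, bounds and feature names are all hypothetical, and dedicated counterfactual tools also weigh how plausible and actionable each change is.

```python
# Hypothetical linear credit score. Weights, bias, threshold, feature names
# and step sizes are illustrative assumptions, not a real lender's model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
BIAS = 0.5
THRESHOLD = 1.0


def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def approved(applicant):
    return score(applicant) >= THRESHOLD


def single_feature_counterfactuals(applicant, steps, bounds, max_steps=100):
    """For a denied applicant, find for each feature the smallest change
    (in fixed increments, within allowed bounds, other features unchanged)
    that would turn the denial into an approval."""
    if approved(applicant):
        return {}
    suggestions = {}
    for feature, step in steps.items():
        low, high = bounds[feature]
        candidate = dict(applicant)
        for i in range(1, max_steps + 1):
            new_value = applicant[feature] + i * step
            if not low <= new_value <= high:
                break  # stop before suggesting an unrealistic value
            candidate[feature] = new_value
            if approved(candidate):
                suggestions[feature] = round(new_value, 4)
                break
    return suggestions


applicant = {"income_k": 48.0, "debt_ratio": 0.40, "late_payments": 1}
steps = {"income_k": 5.0, "debt_ratio": -0.05, "late_payments": -1}
bounds = {"income_k": (0.0, 500.0), "debt_ratio": (0.0, 1.0), "late_payments": (0, 50)}

print(f"score = {score(applicant):.2f}, approved = {approved(applicant)}")
for feature, value in single_feature_counterfactuals(applicant, steps, bounds).items():
    print(f"Would be approved if {feature} were {value} (other inputs unchanged)")
```

For this made-up applicant the search suggests three alternatives: a higher income, a lower debt ratio, or no late payments. Changing one feature at a time keeps the advice simple but can miss cheaper combinations of small changes.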

2. 📜 Legal & Ethical Compliance

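Regulations such as the EU AI Act, which sets transparency and documentation duties for high-risk systems, and the GDPR, which gives people rights around automated decisions that significantly affect them, push organizations to make AI systems transparent and auditable.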

XAI helps organizations stay compliant by making decisions traceable.
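Traceability in practice usually means recording each decision together with what produced it. This minimal sketch builds such a record with only the standard library; the field names, version label and attribution values are assumptions for illustration, not a format required by any regulation.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    # Canonical JSON (sorted keys, no extra whitespace) so the hash is stable.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_record(model_version, inputs, decision, attributions, timestamp=None):
    """Build a JSON-serializable record tying one automated decision to the
    inputs, model version and explanation that produced it."""
    record = {
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "decision": decision,
        "attributions": attributions,
    }
    record["sha256"] = _digest(record)
    return record


def verify(record):
    """True if the record's content still matches its stored hash."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    return _digest(body) == record["sha256"]


record = audit_record(
    model_version="credit-model-1.3.0",  # hypothetical version label
    inputs={"income_k": 48.0, "debt_ratio": 0.40},
    decision="denied",
    attributions={"income_k": 0.3, "debt_ratio": -0.9},  # illustrative values
    timestamp="2025-01-15T10:00:00+00:00",
)
print(json.dumps(record, indent=2))
print(verify(record))
```

A reviewer can later re-run `verify` to confirm the stored record still matches its hash. On its own a hash does not stop someone who can also rewrite it, so production systems typically add signatures or append-only storage.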

3. 🧪 Debugging and Model Improvement

XAI allows developers to:

- Spot biased training data
- Understand performance issues
- Improve model fairness and accuracy
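Permutation importance is a simple model-agnostic way to check what a model actually relies on: shuffle one feature's values and see how much accuracy falls. In this sketch the data are synthetic, and `model` is a hypothetical classifier that has learned to penalize `zip_group`, a stand-in for a postcode-style proxy for a protected attribute. The labels are simply the model's own outputs, standing in for biased historical decisions, so baseline accuracy is 1.0.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, n_repeats=5, seed=0):
    """Average drop in accuracy when one feature's column is shuffled.
    A large drop means the model relies heavily on that feature."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        drops.append(base - accuracy(model, shuffled, labels))
    return sum(drops) / n_repeats


# Hypothetical trained model that picked up a postcode-group proxy.
def model(r):
    return int(r["income_k"] > 50 and r["zip_group"] != 1)


rng = random.Random(42)
rows = [
    {"income_k": rng.uniform(20, 100), "zip_group": rng.randint(0, 2), "noise": rng.random()}
    for _ in range(500)
]
labels = [model(r) for r in rows]  # historical labels carry the same bias

for feature in ("income_k", "zip_group", "noise"):
    print(feature, round(permutation_importance(model, rows, labels, feature), 3))
```

`noise` scores exactly 0 because the model never reads it, while `zip_group` shows a clear drop, the kind of signal that should prompt a closer look at the training data. In real use, compute importances on held-out data with true labels.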

4. 🏥 Human-AI Collaboration

In healthcare, XAI helps doctors validate AI diagnoses and make better-informed treatment decisions.

❓ FAQ – Explainable AI (XAI)

Q1: Is XAI only needed in regulated industries?

No. While crucial in finance and healthcare, XAI benefits every AI application by improving trust and transparency.

Q2: Can XAI be applied to deep learning models?

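Yes. Model-agnostic tools like SHAP and LIME can explain individual predictions of deep learning models, although these post-hoc explanations approximate the model's behavior rather than making the model itself fully interpretable.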
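For neural networks, gradient-based attribution is another common family besides SHAP and LIME. This sketch computes gradient × input for a tiny two-layer network with arbitrary, hypothetical weights. It estimates gradients with central finite differences to stay dependency-free; frameworks such as PyTorch or JAX would use automatic differentiation instead.

```python
import math

# Tiny fixed-weight network: 2 inputs -> 2 tanh hidden units -> 1 sigmoid output.
# The weights are arbitrary illustrative values, not a trained model.
W1 = [[1.5, -0.5], [0.3, 2.0]]  # W1[j][i]: weight from input i to hidden unit j
B1 = [0.0, -0.5]
W2 = [1.2, -0.8]
B2 = 0.1


def network(x):
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, B1)]
    z = sum(w * h for w, h in zip(W2, hidden)) + B2
    return 1.0 / (1.0 + math.exp(-z))


def gradient(f, x, eps=1e-5):
    """Central finite differences; deep-learning frameworks use autodiff instead."""
    grads = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads


def gradient_times_input(f, x):
    """Attribution per input: local sensitivity scaled by the input's value."""
    return [g * xi for g, xi in zip(gradient(f, x), x)]


x = [0.8, 0.4]
print(network(x), gradient_times_input(network, x))
```

For this input the first feature pushes the output up and the second pulls it down. Gradient × input is a fast first-order approximation and can be noisy, which is one reason refinements such as Integrated Gradients exist.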

Q3: Does XAI reduce accuracy?

Not necessarily. Post-hoc tools like SHAP and LIME explain an existing model without changing its predictions, and understanding a model often helps improve its performance and reduce bias.

🏁 Final Thoughts

Explainable AI isn’t just a trend—it’s a necessity. In a world where AI decisions shape lives, XAI ensures that we can understand, trust, and improve the systems we rely on.

For more human-friendly AI breakdowns, follow AiBlogQuest.com—where smart meets simple.

🏷️ Tags:

Explainable AI, XAI Tools, Interpretable AI Models, AI Transparency, Ethical AI, AiBlogQuest